AI self-checkout systems use cameras, sensors, and facial recognition to detect theft by monitoring shopper movements, scanning items, and flagging issues like label switching or mismatches in real time. These tools help retailers reduce losses, speed up transactions, and improve inventory tracking. However, the technology often produces false positives, wrongly accusing innocent customers, and facial recognition shows significant racial bias, with much higher error rates for people of color due to flawed training data. This leads to unfair accusations and erodes shopper trust. In 2026, addressing these biases through better algorithms, diverse data, and transparency remains critical to balancing security with fairness in retail.

Long Version

AI Self-Checkout Theft Detection Systems: Revolutionizing Retail Security Amid Bias Concerns

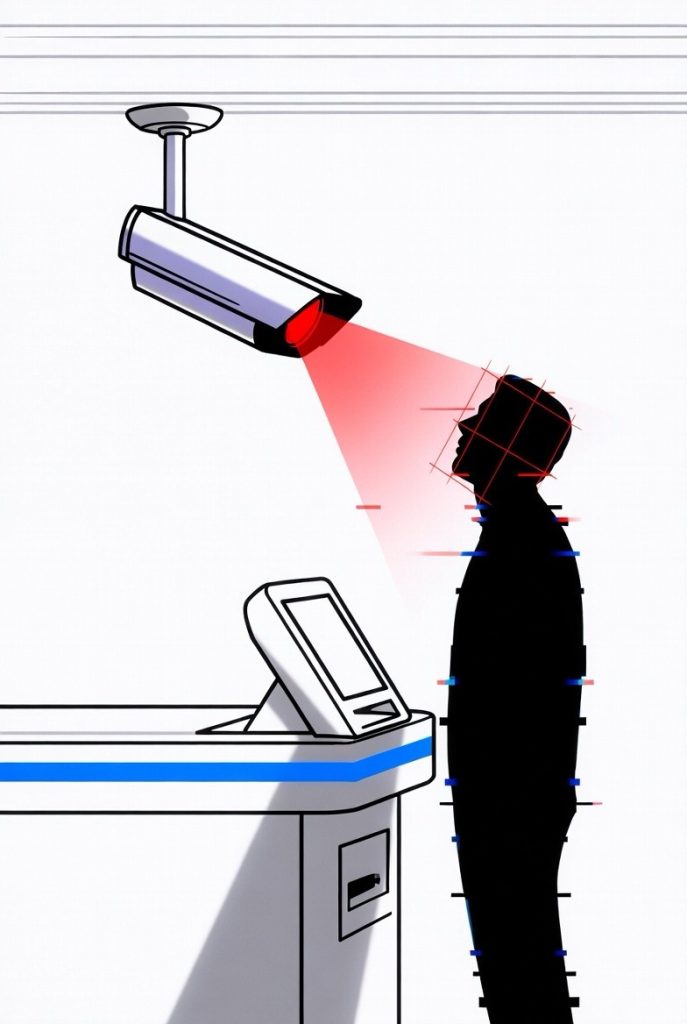

In the fast-evolving world of retail technology, AI self-checkout systems have become common in stores worldwide, promising efficiency and convenience. These setups use cameras and sensors to detect theft: they monitor shopper movements, verify scanned items, flag label switches and mismatches, and alert staff in real time. Some also use facial recognition to track the faces of people entering the store, which raises an obvious question: can self-checkout AI be wrong about theft? As we enter 2026, bias in AI surveillance continues to spark debate, particularly over false accusations that fall on innocent shoppers, and disproportionately on people of color, because of bias in facial recognition.

This article delves into every facet of these systems, from their operational mechanics to ethical challenges, providing a complete resource for understanding theft detection AI in retail environments.

Understanding AI Self-Checkout Systems

AI self-checkout represents the next generation of retail automation, blending machine learning with hardware to streamline transactions. Unlike traditional self-service kiosks, these systems build fraud prevention into the checkout itself and handle complex tasks autonomously. Common configurations in 2026 include stationary kiosks, self-scanning setups, hybrid formats, and AI-based recognition models. They go beyond basic barcode scanning by using video analytics to observe shopper behavior and confirm that items are properly processed.

Retailers have adopted these technologies to identify produce automatically and detect theft in real time, enhancing inventory management and reducing wait times. The core appeal lies in their ability to update stock levels instantly while minimizing human intervention, making them a cornerstone of modern grocery and big-box stores.

How Theft Detection AI Works in Self-Checkout

At the heart of these systems is theft detection AI, which analyzes real-time data to prevent losses. “How does AI prevent theft at self-checkout?” is a common query, and the answer involves a multi-layered approach. AI-powered video security integrates with point-of-sale (POS) systems to spot suspicious activities, such as item mis-scanning or abandoned transactions. Machine learning algorithms process footage from overhead cameras, identifying patterns like repeat offenders or unusual movements at the checkout lane.

Sensors play a crucial role too, detecting weight discrepancies that might indicate fraud, such as an item that weighs far more than the product that was scanned. Combined with smart analytics, these tools give staff early alerts and strengthen overall site security. Some products focus on real-time visibility to curb shrink, while broader solutions add transaction auditing and are counted among the leading retail theft prevention devices in 2026.
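The weight check described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the function name `weight_mismatch`, the 5% relative tolerance, and the 5 g absolute floor are all assumptions chosen for the example.

```python
def weight_mismatch(expected_grams, measured_grams,
                    rel_tolerance=0.05, min_tolerance_grams=5.0):
    """Return True when the measured weight disagrees with the catalog weight.

    Hypothetical helper: both tolerance values are illustrative assumptions.
    """
    # Allow a percentage of the expected weight, but never less than a small
    # absolute floor, so very light items are not flagged by scale noise.
    allowed = max(expected_grams * rel_tolerance, min_tolerance_grams)
    return abs(measured_grams - expected_grams) > allowed


# A 500 g item that weighs 510 g is within tolerance; one that weighs 900 g is not.
print(weight_mismatch(500.0, 510.0))  # False
print(weight_mismatch(500.0, 900.0))  # True
```

A real deployment would likely tune the tolerance per product, since loose produce and packaged goods vary in weight by very different amounts.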

Key Technologies: Cameras, Sensors, and Video Analytics

Diving deeper, video analytics in self-checkout forms the backbone of surveillance. AI cameras monitor transactions, using computer vision to verify scanned items against visual data. This helps in flagging mismatches, such as when a shopper attempts to pass off a high-value item as something cheaper. Gait recognition and face tracking add layers of identification, profiling shoppers based on behavior and past interactions.

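In 2026, edge AI processing runs detection models on the checkout hardware itself, which enables near-instant fraud detection without a round trip to the cloud and helps address threats like organized retail crime. To improve accuracy, tools cross-check visual attributes such as shape and color against the scanned item.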

Detecting Specific Frauds: Label Switching and Mismatches

A frequently asked question is how AI detects label switching in retail. Systems compare what the camera sees with the scanned SKU to catch barcode switching, a tactic that is hard to spot manually. Tricks such as the “banana trick” or the “switcheroo,” in which an expensive item is rung up as a cheaper one, are flagged through real-time analysis of transaction data and shopper behavior.

AI self-checkout theft detection systems combine weight verification with image recognition, which lets them identify items even when the label is damaged. A mismatch can prompt the shopper to correct an honest mistake or alert security when the act appears deliberate. This proactive stance reduces losses from self-checkout theft, which reportedly spiked in 2025 amid economic pressures.
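The comparison between the scanned SKU and what the camera sees might look roughly like the sketch below. Everything here is hypothetical: the `ScanEvent` fields, the `review_scan` function, the 0.9 confidence threshold, and the rule of prompting once before alerting staff are assumptions made for illustration, not a description of any deployed system.

```python
from dataclasses import dataclass


@dataclass
class ScanEvent:
    scanned_sku: str      # SKU decoded from the barcode
    predicted_sku: str    # SKU a vision model believes it sees (hypothetical output)
    confidence: float     # model confidence in predicted_sku, from 0.0 to 1.0


def review_scan(event, prior_mismatches=0, min_confidence=0.9, max_prompts=1):
    """Classify one scan as 'ok', 'prompt_rescan', or 'alert_staff'."""
    # Agreement, or a prediction too uncertain to act on, passes silently.
    if event.predicted_sku == event.scanned_sku or event.confidence < min_confidence:
        return "ok"
    # First confident mismatch: assume an honest mistake and ask for a rescan.
    if prior_mismatches < max_prompts:
        return "prompt_rescan"
    # Repeated confident mismatches in one transaction go to a human.
    return "alert_staff"


event = ScanEvent(scanned_sku="BANANA-LOOSE", predicted_sku="STEAK-RIBEYE", confidence=0.97)
print(review_scan(event))                      # prompt_rescan
print(review_scan(event, prior_mismatches=1))  # alert_staff
```

The design choice worth noting is that the code never decides that a shopper intended to steal. It only escalates a repeated, confident mismatch to a person, which keeps a low-confidence model guess from turning into an accusation.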

Benefits for Retailers and Shoppers

These innovations offer substantial advantages. Retailers see reduced shrink through AI fraud prevention in retail, with ML-based systems providing accurate insights. Shoppers enjoy faster checkouts, personalized offers, and less staff intervention, improving the overall experience.

In grocery settings, AI reshapes loss prevention by focusing on self-checkout theft, allowing employees to prioritize customer service over monitoring. Statistics from 2025 show 50% of retailers planning AI implementations for better efficiency.

The Challenge of False Positives in AI Theft Detection

Despite these benefits, false positives are a major flaw. A false positive occurs when a harmless event, such as background movement in the camera’s view, triggers an alert and leads to an unnecessary intervention. In retail, false positives in facial recognition can wrongly identify individuals, and checkout systems can generate alerts for entirely innocent actions.

Systems often replay overhead camera footage when they detect a possible error, which frustrates shoppers and treats them as suspects. Shoplifting prediction software has raised concerns over such inaccuracies, and repeated false alarms can lead staff to ignore alerts altogether.
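A short calculation shows why false alarms can dominate even when a detector looks accurate on paper. The sketch below applies Bayes’ rule; the function name `false_alert_share` and all three input figures are hypothetical, chosen only to illustrate the arithmetic.

```python
def false_alert_share(theft_rate, detection_rate, false_positive_rate):
    """Fraction of all alerts that are raised on honest transactions."""
    # Alerts on real theft: thefts that occur and are detected.
    true_alerts = theft_rate * detection_rate
    # Alerts on honest transactions: non-thefts that are flagged by mistake.
    false_alerts = (1.0 - theft_rate) * false_positive_rate
    return false_alerts / (true_alerts + false_alerts)


# Hypothetical figures: 1% of transactions involve theft, 90% of those are
# caught, and 5% of honest transactions are flagged by mistake.
share = false_alert_share(theft_rate=0.01, detection_rate=0.90, false_positive_rate=0.05)
print(f"{share:.0%} of alerts are false")  # 85% of alerts are false
```

Under these assumed numbers, roughly 85% of alerts point at honest shoppers, simply because honest transactions vastly outnumber thefts. That is the mechanism behind staff learning to ignore alerts.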

AI Bias in Facial Recognition: Impact on People of Color

“Is facial recognition biased in retail stores?” The evidence says yes. Racial bias in retail AI stems from algorithms that perform worse on darker-skinned individuals: a 2019 NIST evaluation found false positive rates 10 to 100 times higher for Asian and African American faces than for white faces, depending on the algorithm. This bias leads to disproportionate false accusations and exacerbates the “shopping while Black” problem.

“Why does AI falsely accuse people of color?” Training data is often unrepresentative or inaccurate, which produces flawed outcomes such as systems that wrongly tag people of color as shoplifters. Studies of commercial face-analysis tools have found error rates 11.8 to 19.2 percentage points higher on darker-skinned faces, and gaps like these fuel discriminatory surveillance.
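Disparities like these are usually measured by comparing false positive rates across demographic groups. The following is a minimal sketch of that comparison, assuming an audit log where each record notes the group, whether the system flagged the shopper, and whether theft actually occurred. The record layout, the group names, and the counts are made up for illustration and are not real data.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the false positive rate per group.

    Each record is (group, flagged, actually_stole). Only honest shoppers
    (actually_stole is False) count toward the false positive rate.
    """
    flagged = defaultdict(int)
    honest = defaultdict(int)
    for group, was_flagged, actually_stole in records:
        if not actually_stole:
            honest[group] += 1
            if was_flagged:
                flagged[group] += 1
    return {group: flagged[group] / honest[group] for group in honest}


# Made-up audit log: 1000 honest shoppers per group.
records = (
    [("group_a", True, False)] * 10 + [("group_a", False, False)] * 990
    + [("group_b", True, False)] * 100 + [("group_b", False, False)] * 900
)
rates = false_positive_rates(records)
print(rates)  # {'group_a': 0.01, 'group_b': 0.1}
print(rates["group_b"] / rates["group_a"])  # disparity ratio between the groups
```

In this invented example, honest shoppers in `group_b` are flagged about ten times as often as those in `group_a`, which is the kind of ratio a bias audit would be designed to surface.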

Real-World Examples and Case Studies

False accusations in self-checkout AI are not hypothetical. In one reported case, a man was wrongly flagged by live facial recognition, a technology that has been described as turning shoppers into “walking barcodes.” In another, a woman was accused by a system of stealing toilet roll. More than 35 people have reported such errors, which invert the principle of “innocent until proven guilty.”

Online discussions reveal frustration: one commenter noted that AI will be biased unless programmed otherwise, while another highlighted how these systems scale existing prejudices. Receipt checks at self-scan lanes further erode trust by treating customers as thieves.

Trending AI Surveillance Bias in 2026

As of 2026, bias in AI surveillance remains a hot topic. Reports describe facial recognition discriminating against communities of color and entrenching racism in technology. Critics also warn that it fuels racist policing, with misidentifications affecting protesters.

Recent papers describe how “fake alignment” can hide a model’s biases behind refusals, which skews fairness evaluations. Dynamic pricing driven by AI profiles compounds the problem by adjusting prices based on a shopper’s perceived ability to pay.

Solutions and Future Outlook

Addressing these challenges requires auditing training data, improving algorithms for diverse skin tones, and implementing ethical AI practices. Retailers should prioritize process realignment to minimize false positives and foster transparency. Future advancements promise more accurate, bias-reduced systems.

Regulations could mandate bias audits, ensuring AI enhances security without compromising equity.

Conclusion

AI self-checkout theft detection systems offer transformative potential for retail, combating self-checkout theft through sophisticated monitoring. Yet, issues like false positives in AI theft detection and racial bias in retail AI demand urgent attention to prevent harm to innocent shoppers. By balancing innovation with fairness, the industry can build trust and create inclusive shopping experiences in 2026 and beyond.