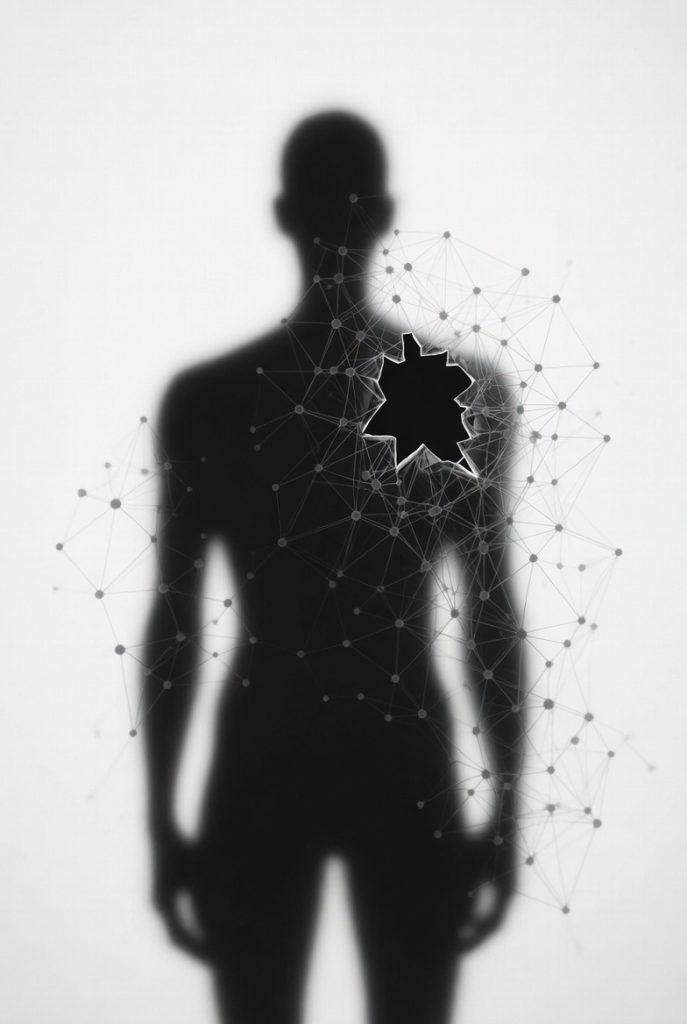

AI bias is a critical blindspot in modern technology: machine learning models trained on skewed data amplify racial and gender biases, perpetuating inequality in hiring, lending, and policing. These algorithms often inherit historical prejudices from imbalanced datasets and homogeneous development teams. The results are discriminatory: resume screening that disadvantages women or minorities, credit decisions that exclude certain communities, and predictive policing that disproportionately targets marginalized neighborhoods. Each outcome feeds a self-reinforcing cycle that widens societal divides. Understanding algorithmic bias is essential because it not only reflects existing inequities but actively deepens them through amplification. Fortunately, bias in AI models can be mitigated by auditing datasets for fairness, incorporating diverse training sources, applying debiasing techniques, and adopting transparent, ethical practices.

Long Version

AI’s Hidden Bias Blindspot: How Algorithms Perpetuate Inequality

Picture this: You’re scrolling through job listings, confident in your qualifications, yet an unseen algorithm dismisses your application because your name or address subtly signals a demographic it has learned to devalue. This isn’t a distant dystopia. It’s happening now, as machine learning models trained on skewed data quietly reinforce racial and gender biases across hiring, lending, and policing. AI bias acts as a blindspot, magnifying societal prejudices and widening gaps in opportunity. This guide covers the mechanics of bias in AI, its origins, its real-world repercussions, and strategies to mitigate it. Whether you’re a developer grappling with ethical dilemmas, a business leader navigating regulations, or simply someone curious about fairer tech, you’ll come away with practical steps to foster equitable outcomes.

Unpacking AI Bias: Core Concepts and Varieties

Bias in AI isn’t just a technical glitch; it’s a reflection of human imperfections amplified by code. At its essence, algorithmic bias occurs when systems produce unfair results, often disadvantaging groups based on race, gender, age, or other traits. This stems from machine learning models that learn patterns from data, inheriting any imbalances present.

Key Definitions and Distinctions

Consider bias in AI as a spectrum. Explicit bias involves direct discriminatory rules, like hard-coding preferences for certain demographics—rare but possible in poorly designed systems. More common is implicit bias, where algorithms infer prejudices from subtle data correlations. For instance, gender bias in machine learning might emerge if training sets undervalue contributions from women, leading to lower scores in evaluations.

Racial bias in AI is particularly pervasive, as models trained on unrepresentative data can perpetuate stereotypes, such as associating certain ethnicities with higher risks. Intersectional biases compound this, affecting individuals at the crossroads of multiple identities, like women of color facing dual disadvantages.

Categories of Bias

Bias infiltrates at various stages:

- Selection Bias: When data collection overlooks diverse populations, skewing representations.

- Measurement Bias: Inaccurate metrics that favor one group, like facial recognition struggling with darker skin tones.

- Confirmation Bias: Systems reinforcing existing assumptions, creating echo chambers in recommendations.
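Measurement bias of the kind listed above often only becomes visible when errors are broken out by group instead of averaged over everyone. The snippet below is a minimal sketch of that check. The function name `error_rate_by_group`, the group labels, and the evaluation records are all hypothetical and invented for illustration; they are not results from any real system.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of wrong predictions for each group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, prediction in records:
        totals[group] += 1
        if truth != prediction:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up evaluation records for an imaginary face-matching model.
records = [
    ("lighter", 1, 1), ("lighter", 0, 0), ("lighter", 1, 1), ("lighter", 0, 0),
    ("darker", 1, 0), ("darker", 0, 0), ("darker", 1, 1), ("darker", 0, 1),
]

rates = error_rate_by_group(records)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} of predictions were wrong")
```

An overall accuracy figure for this toy data would hide the fact that every error falls on one group, which is why a per-group breakdown is a reasonable first diagnostic.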

Understanding these nuances is crucial because unchecked bias doesn’t just err—it systematically excludes, turning AI from a tool of progress into one of division.

Tracing the Roots: Where Bias Originates in AI Systems

AI doesn’t invent prejudices; it absorbs them from its environment. Machine learning models trained on skewed data are like sponges soaking up historical inequities, then squeezing them out in decisions.

Data Imbalances as the Primary Culprit

The foundation of most AI issues lies in training data. If datasets draw from biased sources, such as employment records reflecting past gender disparities, the model learns to replicate them. Recent analyses also warn that generative AI, which now produces a significant portion of new data, risks entrenching these flaws further if that output is not curated carefully.

Diverse training sources are vital, yet often insufficient. For example, in global datasets, underrepresentation of non-Western perspectives can lead to models that perform poorly for vast populations, exacerbating global inequalities.
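A rough way to spot the underrepresentation described above is to compare each group's share of the dataset with the share you would expect from a reference population. The sketch below assumes you already have such reference shares; the function name `representation_gaps`, the region labels, and every number are hypothetical placeholders, not real statistics.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return observed share minus expected share for each reference group.

    samples: iterable of group labels, one per dataset row.
    reference_shares: dict mapping group label to its expected share (0 to 1).
    """
    counts = Counter(samples)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("samples must not be empty")
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Invented example: region labels for 100 rows and an assumed target mix.
dataset_regions = (
    ["north_america"] * 70 + ["europe"] * 20 + ["africa"] * 5 + ["asia"] * 5
)
reference = {"north_america": 0.25, "europe": 0.25, "africa": 0.25, "asia": 0.25}

gaps = representation_gaps(dataset_regions, reference)
for region, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{region}: {gap:+.2f}")
```

Negative gaps mark groups that appear less often than the reference suggests. Choosing the reference population is itself a judgment call, so the output is a prompt for investigation and not a verdict.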

Human Elements in AI Development

Developers and data scientists introduce their own blindspots. Teams lacking diversity might overlook how an algorithm could disadvantage underrepresented groups. Studies suggest that people can also mirror an AI system’s biases, adopting skewed recommendations without question and perpetuating the cycle.

Moreover, design choices matter: prioritizing speed over fairness can embed shortcuts that favor majority patterns.

Broader Systemic Factors

Beyond the code, environmental impacts play a role. AI infrastructure, like data centers, often burdens marginalized communities with resource strain, indirectly widening divides. Economic pressures also play a part: cost-cutting can mean skipping thorough bias checks.

Addressing these roots demands a holistic view, recognizing AI as part of a larger societal ecosystem.

The Far-Reaching Consequences: AI’s Role in Deepening Divides

When algorithms perpetuate inequality, the effects ripple outward, entrenching disparities in critical areas. Bias in AI doesn’t just misjudge individuals; it shapes economies, justice systems, and daily lives.

Reinforcing Inequality in Hiring

AI in recruitment promises objectivity but often delivers the opposite. Racial and gender biases in hiring algorithms have led to systems penalizing resumes with “women’s” indicators or ethnic names. Recent research reveals AI tools ranking applicants lower based on perceived race or gender, with humans willingly following these biased cues.

Real scenarios include models excluding older candidates inferred from resume details, or favoring certain educational backgrounds tied to privilege. The outcome: reduced diversity in workplaces, stifling innovation and perpetuating wage gaps. Intersectional impacts hit hardest, where biases overlap to exclude multiply marginalized groups.

Two knock-on effects stand out:

- Economic Toll: Biased hiring contributes to persistent unemployment in minority communities.

- Feedback Effects: Homogeneous hires create even more skewed data for future models.
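A common first check on a screening tool is to compare selection rates across groups. The sketch below computes each group's rate and its ratio to the highest-rate group. In US employment practice, a ratio below 0.8 is often treated as a rule-of-thumb signal of possible adverse impact (the "four-fifths rule"). The function names, group labels, and decisions here are hypothetical and chosen only to illustrate the arithmetic.

```python
def selection_rates(decisions):
    """Return the share of selected applicants per group.

    decisions: iterable of (group, was_selected) pairs.
    """
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections")
    return {group: rate / highest for group, rate in rates.items()}


# Invented screening outcomes: 10 applicants per group.
decisions = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratios = impact_ratios(rates)
for group in rates:
    flag = "review" if ratios[group] < 0.8 else "ok"
    print(f"{group}: rate={rates[group]:.2f} ratio={ratios[group]:.2f} ({flag})")
```

A low ratio does not prove discrimination on its own, and a passing ratio does not rule it out. It is a screening statistic that tells you where to look more closely.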

As AI reshapes job markets, it risks locking in old inequities unless someone intervenes.

Exacerbating Financial Exclusion in Lending

In finance, algorithmic bias amplifies historical redlining. Models assessing creditworthiness from biased data assign higher risks to minorities, resulting in denials or unfavorable terms. This cycle traps communities in poverty, limiting access to homes or businesses.

Emerging studies link this to broader wealth disparities, where AI-driven decisions transfer opportunities away from the underserved.

Undermining Justice in Policing and Beyond

Predictive policing tools, fed on arrest data skewed by over-policing, target minority areas disproportionately, creating self-reinforcing loops. Similar issues arise in healthcare, where biased algorithms misdiagnose based on unrepresentative training, or in education, where AI grading favors certain cultural expressions.

Age bias in AI adds another layer, denying opportunities to older users in job matching or services. Globally, AI’s backend operations exploit low-wage labor in developing regions, hardwiring economic divides.

These examples illustrate how AI perpetuating inequality isn’t abstract—it’s a tangible force widening societal chasms.

Mechanisms of Amplification: How AI Turns Bias into Systemic Harm

AI’s power lies in scale, but this magnifies flaws. Algorithms don’t merely reflect inequality; they accelerate it through dynamic processes.

The Amplification Loop

Skewed inputs lead to biased outputs, which then inform new data, creating vicious cycles. In social media, for instance, biased recommendations can amplify divisive content, deepening polarization.
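The loop described above can be illustrated with a deliberately simple toy model, using the predictive policing case mentioned earlier. It assumes two districts with identical underlying incident rates, a single patrol that always goes to the district with the most recorded incidents, and incidents that are only recorded where the patrol is present. All of these are simplifying assumptions, and the function name and numbers are hypothetical.

```python
def simulate_patrol_feedback(initial_records, recorded_per_patrol, rounds):
    """Toy feedback loop: patrol where records are highest, record only there.

    Returns a list with each district's share of all records after each round.
    """
    records = dict(initial_records)
    shares = []
    for _ in range(rounds):
        # The allocation decision depends only on past recorded incidents.
        target = max(records, key=records.get)
        # New records appear only in the patrolled district.
        records[target] += recorded_per_patrol
        total = sum(records.values())
        shares.append({d: count / total for d, count in records.items()})
    return shares


# A small, arbitrary head start for district_a in the historical data.
initial = {"district_a": 6, "district_b": 5}
shares = simulate_patrol_feedback(initial, recorded_per_patrol=1, rounds=10)

start_share = initial["district_a"] / sum(initial.values())
end_share = shares[-1]["district_a"]
print(f"district_a share of records: {start_share:.2f} -> {end_share:.2f}")
```

Even though both districts behave identically in this model, the small initial gap in the records grows every round, because the system only collects new data where its earlier output sent it.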

Recent insights suggest AI could sharpen merit-based divides, benefiting high-skilled workers while marginalizing others, akin to a digital Matthew effect where the rich get richer.

Societal and Economic Dimensions

On a macro scale, AI’s influence on inequality unfolds in phases: initial adoption favors the affluent, mid-stage automation displaces low-wage jobs, and long-term entrenchment solidifies power imbalances. Environmental justice ties in, as AI operations disproportionately affect vulnerable areas.

Balanced views note AI’s potential to democratize access, like in education, but only if biases are addressed proactively.

Psychological and Behavioral Impacts

Humans interacting with biased AI adopt those prejudices, as seen in studies where people defer to AI’s discriminatory hiring suggestions. This moral cover allows inequality to persist under a veneer of objectivity.

By 2026, without checks, these dynamics could tip toward irreversible divides, underscoring the urgency of intervention.

Proven Strategies: Mitigating Bias for Fairer AI Outcomes

Mitigating bias in AI models is achievable through layered approaches, turning potential pitfalls into opportunities for equity.

Data-Centric Interventions

Auditing datasets for AI fairness is step one: scrutinize for imbalances and diversify sources. Techniques like re-sampling or generating synthetic balanced data help. Incorporate global perspectives to avoid cultural skews.
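As one concrete form of the re-sampling mentioned above, the sketch below randomly oversamples smaller groups until every group has as many rows as the largest one. It is a minimal illustration under stated assumptions: rows are plain dictionaries, the group column is called `group`, and the function name `oversample_to_balance` is hypothetical.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(rows, group_key, seed=0):
    """Duplicate rows from smaller groups until all groups are equal in size."""
    if not rows:
        return []
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw the missing rows with replacement from the same group.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Invented dataset: six rows from group "a", two from group "b".
rows = [{"group": "a", "label": 1}] * 6 + [{"group": "b", "label": 0}] * 2

balanced = oversample_to_balance(rows, "group")
counts = Counter(row["group"] for row in balanced)
print(dict(counts))
```

Oversampling only repeats what is already in the data, so it cannot add perspectives that were never collected. It is also usually applied to the training split only, so that duplicated rows do not leak into evaluation.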

Algorithmic and Design Adjustments

Employ fairness-aware strategies during development:

- Pre-Processing: Clean data to remove biases before training.

- In-Processing: Adjust algorithms to prioritize equity, using adversarial debiasing.

- Post-Processing: Tweak outputs for balanced results.
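Of the three stages above, post-processing is the easiest to sketch without a training pipeline. The example below picks a separate score cutoff for each group so that roughly the same fraction of each group is selected. This is only one possible post-processing rule, and whether group-specific cutoffs are appropriate depends on the application. The function names, scores, and target rate are hypothetical.

```python
import math


def thresholds_for_equal_selection(scores_by_group, target_rate):
    """Pick a per-group cutoff that selects about target_rate of each group."""
    thresholds = {}
    for group, scores in scores_by_group.items():
        ranked = sorted(scores, reverse=True)
        # Number of candidates to select in this group (at least one).
        k = max(1, math.floor(target_rate * len(ranked)))
        thresholds[group] = ranked[k - 1]
    return thresholds


def apply_thresholds(scores_by_group, thresholds):
    """Return a list of True/False selections for every group."""
    return {
        group: [score >= thresholds[group] for score in scores]
        for group, scores in scores_by_group.items()
    }


# Invented model scores; group_b's scores are systematically lower.
scores_by_group = {
    "group_a": [0.9, 0.8, 0.7, 0.6],
    "group_b": [0.6, 0.5, 0.4, 0.3],
}

thresholds = thresholds_for_equal_selection(scores_by_group, target_rate=0.5)
selections = apply_thresholds(scores_by_group, thresholds)
for group, picks in selections.items():
    print(f"{group}: cutoff={thresholds[group]} selected={sum(picks)}/{len(picks)}")
```

With a single shared cutoff of 0.7, this toy data would select three people from one group and none from the other. Note that tied scores at the cutoff would all be selected, so real data may overshoot the target rate slightly.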

Explainable AI ensures decisions are traceable, allowing challenges to unfair outcomes.

Organizational and Regulatory Frameworks

Diverse teams spot issues early. Form oversight committees, conduct pilot tests, and audit regularly. Emerging regulations, like phased implementations of high-risk AI classifications, mandate these steps.

Best practices include:

- Transparency Mandates: Disclose data sources and decision logic.

- Ethical Training: Equip developers with bias recognition tools.

- Continuous Monitoring: Update models as societal norms evolve.

Innovations like verifiable audits and decentralized verification enhance trust.

Adopting these practices transforms AI from a bias amplifier into an equality enabler.

Looking Ahead: The Path to Ethical and Inclusive AI

As AI advances, so do safeguards. Trends toward specialized, accountable models promise reduced biases. Regulatory landscapes, with unified frameworks emphasizing human-centric design, signal progress.

Challenges persist, like balancing innovation with oversight, but collaborative efforts—across tech, policy, and society—offer hope. By prioritizing fairness, AI can bridge divides rather than widen them.

Wrapping Up: Harnessing AI for a Just Future

AI’s hidden bias blindspot demands our attention. We’ve explored its definitions, its sources, its impacts in hiring, lending, and policing, and the mechanisms that amplify it. Yet with strategies like auditing datasets, diversifying sources, and implementing fairness techniques, we can mitigate these issues and achieve fairer outcomes.

Actionable insights: Audit your data rigorously, build diverse teams, and stay abreast of regulations. Embrace ethical AI not as a constraint but as a catalyst for inclusive innovation. In doing so, we ensure technology serves humanity equitably, fostering a world where algorithms uplift rather than undermine.